A quick introduction to the wonderful world of Snowflake’s Snowpark for Python!

While SQL is the most popular data manipulation language in the world, it does have its fair share of limitations. Advanced data analytics, machine learning, data visualization, and API usage are all examples of tasks better handled by other programming languages. Snowflake’s Snowpark aims to address these shortcomings by enabling users to write and execute code in other programming languages inside their Snowflake environment, making full use of Snowflake's compute power while significantly extending Snowflake’s capabilities. This blog focuses specifically on Snowpark for Python and offers a brief overview of what Snowpark is, how it works, and a quick starter guide to get you going with Snowpark.

What is Snowpark and why should you care?

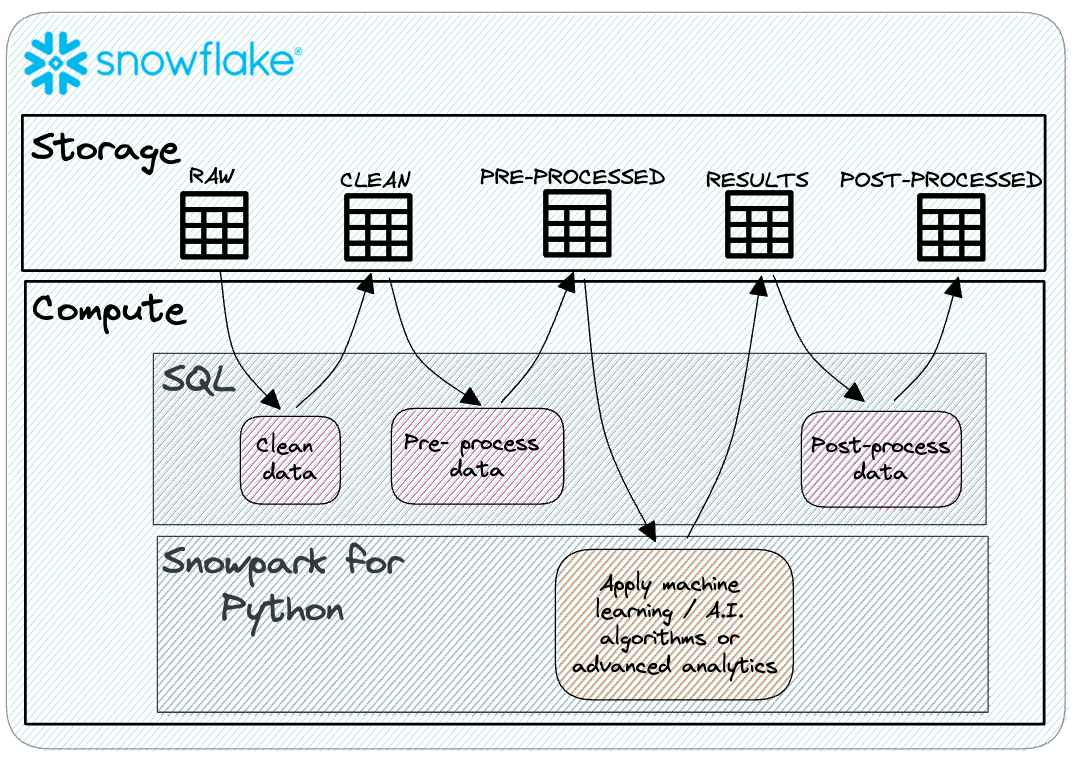

Conceptually, Snowpark is a developer-centric feature in the form of an API that allows you to write custom code in programming languages like Python, Scala, and Java. As mentioned in the introduction, SQL is optimized to do one thing and do it extremely well: querying data. Beyond that, SQL tends to fall short, as more complex operations are difficult or even impossible. Snowpark encourages developers to adopt a so-called polyglot data processing approach, in which you fully leverage the strengths of multiple programming languages while compensating for their weaknesses. As an example, developers can use SQL for efficient data querying and data transformations while delegating more complex tasks like running machine learning algorithms to languages such as Python. The figure below shows what such a data transformation flow would look like.

Snowpark also seamlessly integrates with Snowflake's data storage and processing capabilities, leveraging Snowflake’s powerful compute engine and removing the need to move data outside of the Snowflake environment.

Another useful benefit of Snowpark is access to a rich ecosystem of third-party libraries. Since Snowpark’s inception, Snowflake has partnered with the world’s most popular Python distributor: Anaconda. This collaboration brings enterprise-grade open-source Python innovation to the Data Cloud, making third-party libraries more accessible than ever right from your Snowflake environment.

Snowflake also offers special Snowpark-optimized warehouses, which provide 16 times the memory per node compared to standard Snowflake warehouses, specifically to address the high memory requirements of machine learning training. To create one, set the warehouse type to “SNOWPARK-OPTIMIZED”. Note, however, that this warehouse type is not supported for sizes smaller than Medium.
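As a rough sketch of what this looks like in practice, the helper below builds a `CREATE WAREHOUSE` statement with the warehouse type set to `SNOWPARK-OPTIMIZED`. The function name, the warehouse name `snowpark_opt_wh`, and the short list of sizes are assumptions made for illustration; the size check only mirrors the "Medium or larger" restriction mentioned above.

```python
# Illustrative subset of sizes at or above Medium (not an exhaustive list).
SUPPORTED_SIZES = ("MEDIUM", "LARGE", "XLARGE", "XXLARGE")


def snowpark_optimized_warehouse_sql(name: str, size: str = "MEDIUM") -> str:
    """Build a CREATE WAREHOUSE statement for a Snowpark-optimized warehouse.

    `name` is inserted as-is, so only pass identifiers you trust.
    """
    size = size.upper()
    if size not in SUPPORTED_SIZES:
        raise ValueError(
            f"Size {size!r} is not in the supported subset {SUPPORTED_SIZES}"
        )
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {name} "
        f"WITH WAREHOUSE_SIZE = '{size}' "
        f"WAREHOUSE_TYPE = 'SNOWPARK-OPTIMIZED'"
    )


# Hypothetical warehouse name, used only for this example.
statement = snowpark_optimized_warehouse_sql("snowpark_opt_wh")
print(statement)
```

You could paste the printed statement into a SQL worksheet, or run it from an existing Snowpark session with `session.sql(statement).collect()`.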

How do I use Snowpark?

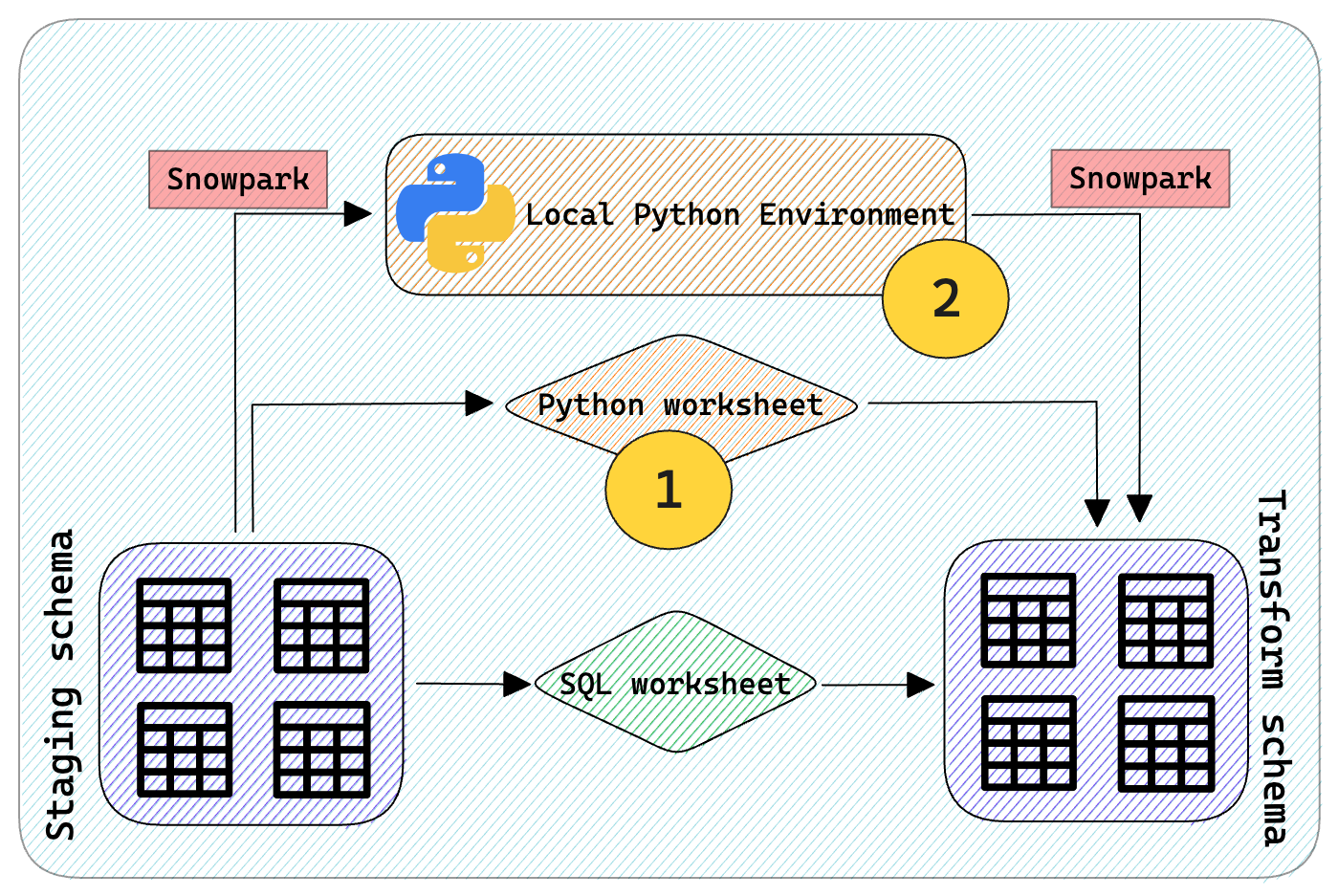

In practice, there are generally two ways to use Snowpark:

- Write Python code in a dedicated Python worksheet right in your Snowflake environment. These Python worksheets are accessible via the new Snowflake UI and will provide you with a small sample upon which you can start building.

- Write Python code locally in a .py file while using the Snowpark API to connect from your local machine to your Snowflake account. Use Snowpark functionality to fully leverage Snowflake's compute and keep data from leaving the data warehouse.

To the veterans among us, the second option sounds a lot like the way you would use the old Snowflake Connector for Python. However, Snowpark for Python has been designed to enable connectivity between the client side and Snowflake in a much more advanced way. While the old connector did allow developers to execute SQL commands, Snowpark enables more complex use cases such as the ability to run UDFs, UDTFs, stored procedures, and much more.
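To make the UDF use case a bit more concrete, here is a minimal sketch of registering a plain Python function as a temporary UDF. It assumes you already have a Snowpark `session` object; the function `add_one`, the helper `register_add_one`, and the UDF name are hypothetical and chosen only for illustration.

```python
def add_one(x: int) -> int:
    """Plain Python logic; it runs locally and, once registered, inside Snowflake."""
    return x + 1


def register_add_one(session):
    """Register add_one as a temporary UDF on an existing Snowpark session."""
    # Imported lazily so this file can be loaded without the Snowpark package.
    from snowflake.snowpark.types import IntegerType

    return session.udf.register(
        add_one,
        return_type=IntegerType(),
        input_types=[IntegerType()],
        name="add_one",  # hypothetical UDF name
        replace=True,
    )


# The function can be tested locally before it is ever registered.
print(add_one(41))
```

Once registered, the UDF should be callable by name for the rest of the session, for example with `session.sql("SELECT add_one(1)").collect()`.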

However, as with all things related to coding, we tend to learn best by doing it ourselves. In the next section, we will show how to put option 2 into practice and go through the steps of setting up your own Snowpark environment. We will also provide some basic code snippets to get you started on your own Snowpark adventure.

Starter’s Guide to Snowpark for Python

We will assume that you already have Python installed on your machine.

First, you need to create a Python virtual environment. This guide uses Python 3.8; note that the Snowpark API requires Python 3.8 or higher. You can use tools like Anaconda, Miniconda, or virtualenv to achieve this.
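If you are unsure which interpreter your virtual environment picked up, a quick check along these lines can help. It is only a convenience sketch: the helper name and the `MIN_VERSION` constant are made up for this example and simply encode the 3.8 minimum stated above.

```python
import sys

# Minimum version taken from the requirement stated above.
MIN_VERSION = (3, 8)


def python_is_supported(version_info=sys.version_info) -> bool:
    """Return True if the given interpreter version is at least MIN_VERSION."""
    return tuple(version_info[:2]) >= MIN_VERSION


if python_is_supported():
    print("This interpreter meets the minimum Python version.")
else:
    print("This interpreter is older than Python 3.8.")
```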

Next, you’ll have to install the Snowpark package. Run the following command:

pip install snowflake-snowpark-python

You can also install the package together with optional extras, such as pandas support, by doing the following:

pip install "snowflake-snowpark-python[pandas]"

Let’s get coding. Open your IDE of choice and fill in the connection parameters in the code snippet provided below. Running the block will create a new Snowpark Session.

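from snowflake.snowpark.session import Session

# account, login_name, password, role, warehouse, database, and schema are
# placeholders: define them with your own values before running this block.
connection_parameters = {
    "account": account,
    "user": login_name,
    "password": password,
    "role": role,
    "warehouse": warehouse,
    "database": database,
    "schema": schema
}

def create_session_object(connection_parameters):
    # Build a new Snowpark session from the connection parameters.
    current_session = Session.builder.configs(connection_parameters).create()
    return current_session

current_session = create_session_object(connection_parameters)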
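Rather than typing credentials directly into a script, one option is to read them from environment variables. The sketch below builds the same connection dictionary that way. The environment variable names and both helper functions are assumptions made for this example, not something Snowpark prescribes.

```python
import os

# Hypothetical environment variable names; pick whatever fits your setup.
ENV_KEYS = {
    "account": "SNOWFLAKE_ACCOUNT",
    "user": "SNOWFLAKE_USER",
    "password": "SNOWFLAKE_PASSWORD",
    "role": "SNOWFLAKE_ROLE",
    "warehouse": "SNOWFLAKE_WAREHOUSE",
    "database": "SNOWFLAKE_DATABASE",
    "schema": "SNOWFLAKE_SCHEMA",
}


def connection_parameters_from_env(environ=None) -> dict:
    """Build the Snowpark connection dictionary from environment variables."""
    environ = os.environ if environ is None else environ
    missing = [env for env in ENV_KEYS.values() if env not in environ]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {key: environ[env] for key, env in ENV_KEYS.items()}


def create_session_from_env():
    """Create a Snowpark session using credentials from the environment."""
    # Imported lazily so the helper above works without the Snowpark package.
    from snowflake.snowpark.session import Session

    return Session.builder.configs(connection_parameters_from_env()).create()
```

With the variables exported in your shell, `current_session = create_session_from_env()` would take the place of the hard-coded dictionary.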

If everything goes well, you should be connected to your Snowflake account. To verify this, we can “query” a table of your choosing as follows:

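# table is a placeholder for the name of an existing table in your schema.
dataframe = current_session.table(f"{database}.{schema}.{table}")
# show() prints the first rows of the table (10 by default).
dataframe.show()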
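If you want the rows back as Python objects instead of printed output, a small helper along the following lines may be useful. It assumes an existing Snowpark session; `qualified_table_name` and `preview_rows` are hypothetical names introduced for this sketch.

```python
def qualified_table_name(database: str, schema: str, table: str) -> str:
    """Join the three parts into a fully qualified table name."""
    return f"{database}.{schema}.{table}"


def preview_rows(session, database: str, schema: str, table: str, n: int = 5):
    """Fetch at most n rows from a table through an existing Snowpark session."""
    dataframe = session.table(qualified_table_name(database, schema, table))
    # limit() is applied on the Snowflake side; collect() then brings only
    # the limited rows back to the client.
    return dataframe.limit(n).collect()
```

A call such as `preview_rows(current_session, database, schema, table)` would then return a short list of rows while the table itself stays in Snowflake.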

If this worked as expected, you have now successfully created a new Snowpark for Python environment. You may now embark on your own Snowpark adventure!

Conclusion

In summary, Snowpark is a handy (arguably crucial) feature that gives developers access to other programming languages to expand the functionality of their code. The main benefits include:

- Develop code locally in a familiar environment;

- Fully leverage the scalable and elastic compute resources provided by Snowflake upon running your code;

- Access a rich ecosystem of third-party libraries.